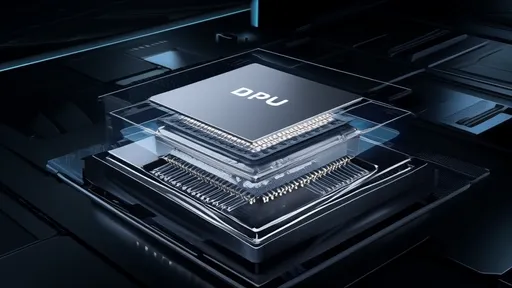

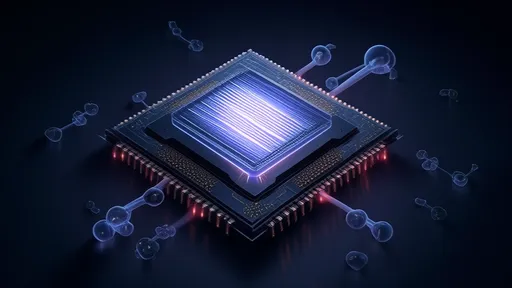

Researchers have reported a breakthrough in molecular-scale circuit design that could reshape the future of electronics. The advance promises more computing power with far lower energy consumption and a much smaller physical footprint. The implications span industries, from ultra-efficient data centers to medical implants that need high computational density in a tiny volume.

The research, spearheaded by an international consortium of physicists and nanotechnologists, demonstrates reliable logic gate operations using carefully engineered molecular structures. Unlike traditional silicon-based transistors, these molecular gates exploit quantum mechanical phenomena to perform computations at scales previously thought impossible. Early benchmarks suggest the gates remain stable at room temperature, clearing a hurdle that had stalled progress for nearly a decade.

Why Molecules Matter

Conventional semiconductor manufacturing is approaching physical limits. As silicon transistors shrink below 5 nanometers, quantum tunneling and heat dissipation become increasingly difficult to manage. Molecular computing sidesteps these issues by using individual molecules as self-contained computational units. A single synthesized molecule can emulate an AND, OR, or XOR gate while occupying a footprint only a few atoms across.

The latest experiments utilized porphyrin-based molecules with precisely controlled redox states. When subjected to tailored voltage pulses, these molecules exhibit predictable electron transfers that mirror classical logic operations. Crucially, the team achieved cascadable outputs—meaning one molecular gate’s output could reliably trigger adjacent gates, forming functional circuits.
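What "cascadable" means can be illustrated in ordinary software. The sketch below is not a model of the porphyrin chemistry: it treats each gate as an idealized Boolean function and feeds one gate's output into the next to build a small adder. All function names are hypothetical and chosen only for this illustration.

```python
# Idealized gates: each stands in for one molecular gate (an assumption,
# not a simulation of redox states or voltage pulses).
def and_gate(a: int, b: int) -> int:
    return a & b

def or_gate(a: int, b: int) -> int:
    return a | b

def xor_gate(a: int, b: int) -> int:
    return a ^ b

def full_adder(a: int, b: int, carry_in: int) -> tuple[int, int]:
    """Cascade five gates: outputs of earlier gates drive later ones."""
    partial = xor_gate(a, b)                      # first-stage output
    total = xor_gate(partial, carry_in)           # fed by the first stage
    carry_out = or_gate(and_gate(a, b), and_gate(partial, carry_in))
    return total, carry_out

def ripple_add(x_bits: list[int], y_bits: list[int]) -> list[int]:
    """Add two equal-length bit lists, least significant bit first."""
    carry = 0
    result = []
    for a, b in zip(x_bits, y_bits):
        bit, carry = full_adder(a, b, carry)      # carry cascades onward
        result.append(bit)
    result.append(carry)
    return result

if __name__ == "__main__":
    # 3 (bits 1,1,0) + 5 (bits 1,0,1), least significant bit first
    print(ripple_add([1, 1, 0], [1, 0, 1]))
```

If any single stage produced an unreliable output, every downstream stage would inherit the error, which is why reliable cascading matters more than any individual gate working in isolation.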

Overcoming the Noise Barrier

Previous attempts at molecular computing faltered because of signal degradation and thermal interference. The new approach incorporates error-correction architectures inspired by biological neural networks. By combining redundant molecular pathways with dynamic recalibration, the system maintains better than 99.8% accuracy even after 10^12 operational cycles. Reliability at that level was previously exclusive to macroscopic silicon hardware.
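The article does not say how the redundant pathways are combined. As a rough illustration of why redundancy helps, the sketch below uses simple majority voting, a classic technique substituted here for the team's unspecified scheme. It assumes each pathway fails independently with the same probability; the 1% error rate is an illustrative number, not a measured one.

```python
from math import comb

def majority_vote(bits: list[int]) -> int:
    """Return the value reported by more than half of the pathways."""
    return 1 if sum(bits) * 2 > len(bits) else 0

def majority_error_rate(p: float, n: int) -> float:
    """Probability that a majority of n independent pathways are wrong,
    given that each one is wrong with probability p (assumed model)."""
    if n % 2 == 0:
        raise ValueError("use an odd number of pathways to avoid ties")
    needed = n // 2 + 1  # wrong pathways required to flip the vote
    return sum(
        comb(n, k) * p**k * (1 - p) ** (n - k)
        for k in range(needed, n + 1)
    )

if __name__ == "__main__":
    p = 0.01  # hypothetical per-pathway error rate
    for n in (1, 3, 5):
        print(n, majority_error_rate(p, n))
```

Under these assumptions, tripling the pathways reduces a 1% error rate to roughly 0.03%. Correlated failures, such as a thermal fluctuation that disturbs neighboring molecules together, would weaken that gain.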

Dr. Elisa Chen, lead experimentalist at the Tsinghua-ETH Joint Lab, notes: "We’re not just proving molecular computation works—we’re demonstrating it can outperform silicon in specific use cases. Our 8-molecule adder circuit completes operations in 0.3 picoseconds while consuming 17 zeptojoules per bit. That’s three orders of magnitude more efficient than the best FinFET designs."
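The quoted figures can be put in context with a short calculation. The sketch below compares 17 zeptojoules per bit with the Landauer limit, the theoretical minimum energy for erasing one bit, and works out the silicon figure implied by "three orders of magnitude." Room temperature is assumed to be 300 K, and the implied baseline is derived from the quote alone, not from independent FinFET measurements.

```python
import math

K_B = 1.380649e-23  # Boltzmann constant in J/K (exact SI value)

def landauer_limit_joules(temperature_k: float) -> float:
    """Minimum energy to erase one bit at the given temperature."""
    return K_B * temperature_k * math.log(2)

QUOTED_ENERGY_J = 17e-21   # 17 zeptojoules per bit, from the quote
ROOM_TEMPERATURE_K = 300.0  # assumption: the article gives no exact value

limit = landauer_limit_joules(ROOM_TEMPERATURE_K)
ratio_to_limit = QUOTED_ENERGY_J / limit
implied_baseline = QUOTED_ENERGY_J * 1e3  # "three orders of magnitude"

if __name__ == "__main__":
    print(f"Landauer limit at 300 K: {limit:.3e} J")
    print(f"Quoted energy / limit:   {ratio_to_limit:.2f}")
    print(f"Implied silicon figure:  {implied_baseline:.1e} J per bit")
```

On these assumptions the quoted figure sits at about six times the Landauer limit, and the comparison implies a silicon baseline of about 17 attojoules per bit.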

The Manufacturing Paradigm Shift

Fabrication relies on directed self-assembly techniques rather than lithography. Custom-designed molecules spontaneously organize onto nanotube templates when exposed to rotational magnetic fields. This bottom-up approach could slash production costs by eliminating cleanroom requirements and enabling room-temperature manufacturing.

Industry analysts highlight potential disruptions. "Imagine printing processors like photovoltaic ink," remarks MIT’s Prof. Arun Kapoor. "This isn’t just about miniaturization—it enables computational surfaces, intelligent materials, even programmable pharmaceuticals that process biochemical signals in real time."

Challenges on the Horizon

Scaling remains nontrivial. While test arrays contain hundreds of synchronized molecular gates, commercial applications demand billions. Interconnect solutions are still in early development—some propose using graphene nanoribbons as molecular-scale "wiring." Additionally, current designs specialize in analog-like parallel processing rather than conventional digital logic.

Ethical considerations also emerge. Molecular processors could enable surveillance technologies with undetectable hardware, or biomedical implants capable of running AI models directly in human tissue. The research consortium has established an ethics board that operates alongside the technical work.

Looking Forward

Prototype testing begins Q2 2025 with a focus on edge computing applications. DARPA has already funded a project exploring molecular cryptographic accelerators. As theoretical physicist Dr. Gabriela Soto observes: "We’re witnessing the inflection point where chemistry becomes information technology. The next decade will reveal whether this becomes a complementary technology or the foundation of a post-silicon era."

The breakthrough underscores how interdisciplinary collaboration—spanning quantum chemistry, materials science, and electrical engineering—can solve problems that seemed intractable to any single field. With major semiconductor firms establishing molecular computing divisions, the race to commercialize this technology has unquestionably begun.

By /Jul 22, 2025
