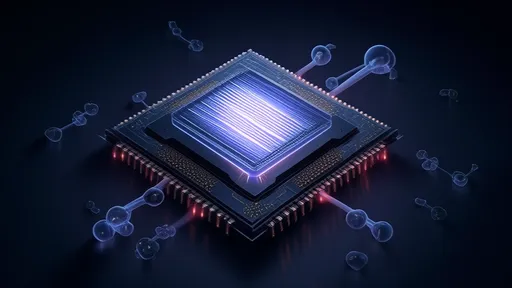

The field of neuromorphic computing has taken a significant leap forward with recent breakthroughs in pulse coding optimization for brain-inspired chips. As researchers strive to bridge the gap between biological neural networks and artificial intelligence systems, the refinement of pulse-based information encoding has emerged as a critical frontier. These developments promise to revolutionize how we process information in energy-efficient computing architectures.

At the core of this advancement lies a fundamental rethinking of how artificial neurons communicate. Traditional artificial neural networks pass continuous-valued activations between units, while their biological counterparts communicate through discrete, event-driven spikes. The latest generation of neuromorphic processors has made remarkable progress in mimicking this biological behavior through sophisticated pulse coding schemes. These schemes determine not just when neurons fire, but how the timing, frequency, and patterns of these pulses carry meaningful information.
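To make the difference between frequency-based and timing-based encoding concrete, here is a minimal Python sketch of two textbook schemes: rate coding and time-to-first-spike (latency) coding. The function names, the 100-step window, and the `max_rate` parameter are illustrative assumptions, not details of any particular chip.

```python
import random

def rate_encode(value, n_steps=100, max_rate=0.5, seed=0):
    """Rate coding: the per-step spike probability scales with value in [0, 1]."""
    rng = random.Random(seed)  # fixed seed so the sketch is reproducible
    p = max(0.0, min(1.0, value)) * max_rate
    return [1 if rng.random() < p else 0 for _ in range(n_steps)]

def latency_encode(value, n_steps=100):
    """Latency coding: a stronger input fires earlier; zero input never fires."""
    v = max(0.0, min(1.0, value))
    train = [0] * n_steps
    if v > 0.0:
        t = round((1.0 - v) * (n_steps - 1))  # v = 1.0 fires at step 0
        train[t] = 1
    return train

strong, weak = 0.9, 0.2
print(sum(rate_encode(strong)), sum(rate_encode(weak)))            # spike counts
print(latency_encode(strong).index(1), latency_encode(weak).index(1))  # spike times
```

In the rate-coded trains the information sits in how many spikes occur, while in the latency-coded trains a single spike carries the value through its timing, which is one reason timing-based schemes can get by with far fewer pulses.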

One particularly promising approach involves adaptive temporal coding mechanisms that dynamically adjust to input stimuli. Unlike fixed coding schemes, these systems can compress or expand their temporal resolution based on the urgency and importance of the information being processed. This mirrors the brain's remarkable ability to prioritize critical sensory inputs while filtering out less important background noise. Early benchmarks show these adaptive systems achieving improvements of up to 40% in both energy efficiency and processing speed compared with earlier static coding methods.
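One simple way to picture adjustable temporal resolution is a binning rule that uses coarse time bins while the input is quiet and finer bins where it changes quickly. The sketch below is a hypothetical illustration of that idea only: `adaptive_bins`, its halving rule, and its thresholds are assumptions, not the mechanism used in the systems described above.

```python
def adaptive_bins(signal, base_bin=8, min_bin=1, change_threshold=0.2):
    """Split a sampled signal into (start, end) index ranges.

    Bins start at base_bin samples and are halved until the signal's swing
    inside the bin is at most change_threshold (or min_bin is reached).
    """
    bins = []
    i, n = 0, len(signal)
    while i < n:
        width = base_bin
        while width > min_bin:
            window = signal[i:i + width]
            if max(window) - min(window) <= change_threshold:
                break  # quiet enough: keep this coarser bin
            width //= 2
        end = min(i + width, n)
        bins.append((i, end))
        i = end
    return bins

# A flat stretch followed by rapid alternation.
signal = [0.0] * 8 + [0.0, 1.0, 0.0, 1.0]
print(adaptive_bins(signal))
```

The flat stretch is covered by one wide bin, while the rapidly alternating tail is resolved sample by sample, so temporal resolution is spent only where the input demands it.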

The optimization of population coding represents another major stride forward. In biological neural networks, information is often distributed across populations of neurons rather than being localized to specific cells. Modern neuromorphic chips are now implementing similar strategies, where the collective firing patterns of neuron groups encode complex information. This approach not only increases fault tolerance but also enables parallel processing capabilities that closely resemble biological systems. Researchers have demonstrated that properly optimized population coding can reduce hardware resource requirements by up to 30% while maintaining or even improving computational accuracy.
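The idea of spreading a value across a group of neurons can be sketched with Gaussian tuning curves and a weighted-average decoder, a common textbook formulation of population coding. The neuron count, tuning width, and function names below are assumptions chosen for illustration.

```python
import math

def population_encode(x, centers, width=0.15):
    """Each neuron responds most strongly when x is near its preferred value."""
    return [math.exp(-((x - c) ** 2) / (2 * width ** 2)) for c in centers]

def population_decode(activity, centers):
    """Estimate x as the activity-weighted average of the preferred values."""
    total = sum(activity)
    if total == 0:
        raise ValueError("no active neurons to decode from")
    return sum(a * c for a, c in zip(activity, centers)) / total

centers = [i / 10 for i in range(11)]      # 11 neurons tiling [0, 1]
activity = population_encode(0.5, centers)
print(population_decode(activity, centers))

# Fault tolerance: silence one neuron and decode again.
damaged = list(activity)
damaged[3] = 0.0
print(population_decode(damaged, centers))
```

Because every neuron contributes only part of the estimate, losing one of them shifts the decoded value slightly rather than destroying it, which is the fault-tolerance property described above.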

Energy efficiency remains a paramount concern in pulse coding optimization. The brain serves as both inspiration and benchmark here: it consumes roughly 20 watts while outperforming conventional computers in many cognitive tasks. Recent innovations in sparse coding techniques have yielded particularly impressive results in this regard. By ensuring that only the most informative pulses are transmitted, these methods can reduce energy consumption by up to 60% in certain applications. The key breakthrough has been developing coding schemes that maintain information fidelity while dramatically reducing spiking activity.
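A simple way to see how spiking activity can drop without losing the signal is send-on-delta encoding, where a pulse is emitted only when the input has moved by a fixed step since the last pulse. This is one well-known event-driven scheme, used here as a stand-in; the article does not say which sparse coding methods the cited results rely on, and the names and threshold below are assumptions.

```python
def delta_encode(samples, threshold=0.25):
    """Emit (index, +1 or -1) events only when the signal moves by >= threshold."""
    events = []
    reference = samples[0]
    for i, s in enumerate(samples[1:], start=1):
        while s - reference >= threshold:
            reference += threshold
            events.append((i, +1))
        while reference - s >= threshold:
            reference -= threshold
            events.append((i, -1))
    return events

def delta_decode(events, start, n, threshold=0.25):
    """Rebuild a staircase approximation of the signal from the events."""
    out, level, j = [], start, 0
    for i in range(n):
        while j < len(events) and events[j][0] == i:
            level += events[j][1] * threshold
            j += 1
        out.append(level)
    return out

samples = [0.0] * 50 + [1.0] * 50          # a step input, 100 samples
events = delta_encode(samples)
rebuilt = delta_decode(events, samples[0], len(samples))
print(len(samples), "samples ->", len(events), "events")
print(max(abs(a - b) for a, b in zip(samples, rebuilt)))  # reconstruction error
```

For a signal that is constant most of the time, the number of transmitted events is a small fraction of the number of samples, while the reconstruction error stays below the chosen threshold.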

Perhaps the most exciting development is the emergence of hybrid coding schemes that combine the strengths of multiple approaches. These systems can switch between rate coding, temporal coding, and population coding depending on the nature of the computational task at hand. Early prototypes have shown remarkable versatility, performing equally well on pattern recognition tasks that require precise timing and on more abstract cognitive tasks that benefit from distributed representations. This adaptability suggests we may be approaching neuromorphic systems that can dynamically reconfigure their information encoding strategies much as biological brains do.

The implications of these pulse coding optimizations extend far beyond laboratory benchmarks. In practical applications ranging from edge computing to autonomous systems, the ability to process information more efficiently while consuming less power could enable entirely new categories of intelligent devices. For instance, optimized pulse coding could make real-time, on-device AI processing feasible for wearable health monitors or enable more sophisticated decision-making in resource-constrained environments like space exploration missions.

As the field progresses, researchers are turning their attention to more biologically plausible learning rules that can work in tandem with advanced pulse coding schemes. The combination of optimized information encoding with spike-timing-dependent plasticity and other biologically inspired learning mechanisms could produce neuromorphic systems that not only process information efficiently but also adapt and learn from their environment in ways that more closely resemble biological intelligence.
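For readers unfamiliar with spike-timing-dependent plasticity, the standard pair-based form of the rule strengthens a synapse when the presynaptic spike precedes the postsynaptic one and weakens it otherwise, with an effect that decays exponentially as the spikes move apart in time. The sketch below uses that textbook form; the amplitudes, time constants, and weight bounds are illustrative assumptions, not values from any specific chip.

```python
import math

def stdp_delta_w(t_pre, t_post, a_plus=0.01, a_minus=0.012,
                 tau_plus=20.0, tau_minus=20.0):
    """Pair-based STDP weight change for one pre/post spike pair (times in ms)."""
    dt = t_post - t_pre
    if dt > 0:   # pre fired before post: potentiation
        return a_plus * math.exp(-dt / tau_plus)
    if dt < 0:   # post fired before pre: depression
        return -a_minus * math.exp(dt / tau_minus)
    return 0.0

def apply_stdp(weight, t_pre, t_post, w_min=0.0, w_max=1.0):
    """Update a weight and clip it to [w_min, w_max]."""
    return max(w_min, min(w_max, weight + stdp_delta_w(t_pre, t_post)))

w = 0.5
w = apply_stdp(w, t_pre=10.0, t_post=15.0)   # causal pair: weight increases
w = apply_stdp(w, t_pre=30.0, t_post=22.0)   # anti-causal pair: weight decreases
print(w)
```

Because the update depends only on the relative timing of two local spikes, a rule of this kind pairs naturally with timing-based pulse codes.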

The road ahead still presents significant challenges, particularly in developing standardized frameworks for comparing different pulse coding approaches and in scaling these techniques to larger, more complex neural networks. However, the rapid pace of innovation in this space suggests that brain-inspired chips with increasingly sophisticated and efficient pulse coding capabilities will play a major role in the next generation of computing architectures.

Looking forward, we can expect these optimized neuromorphic processors to move from research labs into practical applications within the next few years. As pulse coding techniques continue to mature, they may well provide the key to unlocking artificial intelligence systems that rival the efficiency, adaptability, and computational power of the human brain while operating within the strict energy budgets required by mobile and embedded applications.

By /Jul 22, 2025
