The healthcare industry is approaching a significant technological shift, one that could yield new insights into complex diseases such as cancer while safeguarding patient privacy. Federated learning, a decentralized machine learning approach, makes it possible to train AI models across multiple hospitals without sharing raw patient data. This matters especially in oncology, where collaborative research often runs up against stringent data protection regulations.

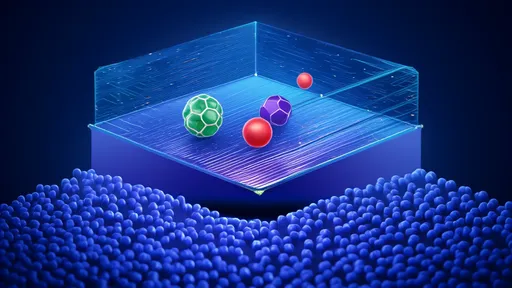

Federated learning represents a fundamental rethinking of how medical AI models are developed. Traditional approaches require centralizing datasets from various institutions, a process fraught with legal and ethical hurdles. This framework instead allows hospitals to train models collaboratively while keeping sensitive patient information within their own secure systems. Each participating institution trains the model locally on its own data and shares only model parameter updates, not the underlying patient records. A coordinating server then aggregates these updates into a global model, which is sent back to the sites for the next round, so its performance gradually improves across all participating sites.
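The training loop described above can be sketched in a few lines. This is a minimal illustration of federated averaging (FedAvg) on a toy one-feature linear model, not a production setup: the function names (`local_update`, `federated_average`, `train_federated`), the learning rate, and the two synthetic "hospital" datasets are all assumptions made for the example.

```python
from typing import List, Sequence, Tuple

Weights = List[float]                    # [slope, intercept] of a toy linear model
Dataset = Sequence[Tuple[float, float]]  # (feature, label) pairs that never leave the site


def local_update(weights: Weights, data: Dataset,
                 lr: float = 0.5, epochs: int = 5) -> Weights:
    """Run a few gradient-descent steps on one site's private data."""
    w, b = weights
    n = len(data)
    for _ in range(epochs):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return [w, b]


def federated_average(site_weights: Sequence[Weights],
                      site_sizes: Sequence[int]) -> Weights:
    """Combine site models, weighting each by its number of local samples."""
    total = sum(site_sizes)
    dim = len(site_weights[0])
    return [
        sum(w[i] * n for w, n in zip(site_weights, site_sizes)) / total
        for i in range(dim)
    ]


def train_federated(sites: Sequence[Dataset], rounds: int = 50) -> Weights:
    """Coordinator loop: broadcast the model, collect updates, aggregate."""
    global_weights: Weights = [0.0, 0.0]
    for _ in range(rounds):
        # Only the updated parameters are returned, never the records.
        updates = [local_update(global_weights, data) for data in sites]
        global_weights = federated_average(updates, [len(d) for d in sites])
    return global_weights


# Two hypothetical hospitals whose data follow y = 2x + 1.
hospital_a = [(0.0, 1.0), (0.5, 2.0), (1.0, 3.0)]
hospital_b = [(0.2, 1.4), (0.4, 1.8), (0.6, 2.2), (0.8, 2.6)]
model = train_federated([hospital_a, hospital_b])
```

In this toy setting both sites share the same underlying relationship, so the averaged model converges toward it. Real clinical data differ between sites, which is where the weighting and privacy questions discussed later come in.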

The implications for cancer research are profound. Oncology has long suffered from data fragmentation, with each hospital holding valuable but isolated patient datasets. Small sample sizes at individual institutions often limit the statistical power of research, especially for rare cancer subtypes. Federated learning enables the creation of robust models that learn from diverse patient populations across geographical boundaries while maintaining compliance with regulations like HIPAA in the United States and GDPR in Europe.

Several pioneering initiatives have demonstrated the potential of this approach. The European Union's MELLODDY project, involving pharmaceutical companies and research institutions, has shown promising results in drug discovery. In oncology specifically, researchers have applied federated learning to improve tumor segmentation in radiology images across multiple hospitals. These early successes suggest that the technique could be extended to predictive models for cancer progression, treatment response, and personalized therapy recommendations.

The technical implementation of federated learning in healthcare requires careful consideration of several factors. Hospitals often use different electronic health record systems with varying data formats and standards. Data harmonization becomes crucial—not through centralized pooling, but by establishing common data elements and ontologies that allow models to learn effectively from heterogeneous sources. Additionally, the federated approach must account for imbalances in data quantity and quality across institutions, ensuring that larger hospitals don't disproportionately influence the model at the expense of smaller centers with potentially valuable niche datasets.
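One simple way to limit the influence of very large sites is to cap the sample count used when computing aggregation weights. The sketch below is only one possible heuristic, not an established standard. The function name `capped_weights`, the `cap` parameter, and the example site sizes are all hypothetical.

```python
from typing import List, Sequence


def capped_weights(site_sizes: Sequence[int], cap: int) -> List[float]:
    """Aggregation weights proportional to min(site size, cap).

    Sites above the cap count as if they had exactly `cap` samples,
    so no single large hospital can dominate the average.
    """
    effective = [min(n, cap) for n in site_sizes]
    total = sum(effective)
    if total == 0:
        raise ValueError("at least one site must contribute samples")
    return [e / total for e in effective]


# Hypothetical consortium: one large center and two smaller ones.
sizes = [10_000, 500, 200]
weights = capped_weights(sizes, cap=1_000)
# Uncapped, the large center would hold 10000/10700 of the weight;
# capped, it holds 1000/1700.
```

The trade-off is that capping discards some of the statistical benefit of the larger dataset, so the cap would need to be chosen with the consortium's goals in mind.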

Privacy preservation remains the cornerstone of federated learning's value proposition. Additional safeguards can be layered onto the basic framework: differential privacy, a statistical technique that adds calibrated noise to the shared updates, and cryptographic techniques such as secure multi-party computation. These methods reduce the risk that the model updates shared between institutions inadvertently reveal sensitive information about individual patients. Recent advances in homomorphic encryption, which allows computations to be performed on encrypted data, offer another powerful tool for privacy protection in collaborative cancer research.
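To make the secure multi-party computation idea concrete, here is a toy sketch of additive pairwise masking, the core trick behind secure aggregation: each pair of sites agrees on a random mask that one adds and the other subtracts, so the aggregator sees only masked values, yet the masks cancel in the sum. Everything here is simplified for illustration. The constants `MODULUS` and `SCALE` and the function names are assumptions, and a single function simulates all sites, whereas a real protocol would derive each mask from a secret shared only between the two sites involved and would handle dropouts.

```python
import random
from typing import List, Sequence

MODULUS = 2**61 - 1   # arithmetic is done modulo this value (assumed for the sketch)
SCALE = 10**6         # fixed-point scaling: floats become integers


def encode(x: float) -> int:
    """Map a float to a non-negative integer modulo MODULUS."""
    return round(x * SCALE) % MODULUS


def decode(v: int) -> float:
    """Inverse of encode; the upper half of the range represents negatives."""
    if v > MODULUS // 2:
        v -= MODULUS
    return v / SCALE


def mask_updates(updates: Sequence[Sequence[float]],
                 rng: random.Random) -> List[List[int]]:
    """Return each site's update with pairwise masks applied."""
    masked = [[encode(x) for x in u] for u in updates]
    n, dim = len(updates), len(updates[0])
    for i in range(n):
        for j in range(i + 1, n):
            for d in range(dim):
                m = rng.randrange(MODULUS)
                masked[i][d] = (masked[i][d] + m) % MODULUS  # site i adds
                masked[j][d] = (masked[j][d] - m) % MODULUS  # site j subtracts
    return masked


def aggregate(masked: Sequence[Sequence[int]]) -> List[float]:
    """Sum masked updates; masks cancel, leaving the sum of the true updates."""
    dim = len(masked[0])
    return [decode(sum(m[d] for m in masked) % MODULUS) for d in range(dim)]


updates = [[0.5, -1.25], [1.5, 0.25], [-0.75, 2.0]]
masked = mask_updates(updates, random.Random(0))
total = aggregate(masked)  # element-wise sum of the three original updates
```

Note that `random.Random` is not a cryptographically secure generator; it is used here only to keep the example reproducible. Differential privacy is complementary: it would add calibrated noise to the updates themselves, which this sketch does not do.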

The clinical applications of federated tumor models are vast and varied. Predictive algorithms could help oncologists identify high-risk patients who might benefit from more aggressive therapies. Treatment response models could suggest optimal drug combinations based on patterns learned from thousands of similar cases across multiple institutions. Perhaps most exciting is the potential to accelerate rare cancer research by effectively pooling knowledge from scattered cases that would otherwise be too few at any single center to support statistically meaningful analysis.

Despite its promise, federated learning faces significant implementation challenges. The computational infrastructure required—including secure communication protocols and coordination mechanisms—can be complex to establish. There are also questions about how to fairly attribute contributions in publications when multiple institutions participate in model development. Legal frameworks need to evolve to clarify liability issues and intellectual property rights in these collaborative models. Perhaps most fundamentally, the healthcare community must build trust in these systems, ensuring that all participants believe in both the privacy protections and the scientific validity of the approach.

The future of federated learning in oncology may involve hybrid approaches that combine its strengths with other privacy-preserving techniques. Some researchers envision a system where certain types of non-sensitive data could be shared more openly, while highly personal information remains strictly protected. Others suggest that federated models could serve as a first pass, identifying promising research directions that could then undergo traditional centralized validation with explicit patient consent for data sharing in specific cases.

As the technology matures, we're likely to see federated learning become standard practice in multi-institutional cancer research. Professional societies and research consortia are beginning to establish best practices and technical standards. Funding agencies are increasingly prioritizing privacy-preserving approaches in their grant requirements. What began as an experimental technique in computer science labs is rapidly becoming an essential tool in the fight against cancer—one that respects patient confidentiality while harnessing the power of collective medical knowledge.

The ethical dimensions of this technology warrant careful ongoing discussion. While federated learning solves many privacy concerns, it doesn't eliminate all ethical questions about AI in healthcare. Issues of algorithmic bias, informed consent for data use, and appropriate clinical validation remain critical. The oncology community must engage with ethicists, patient advocates, and legal experts to ensure these powerful tools are developed and deployed responsibly.

Looking ahead, the integration of federated learning with other emerging technologies could unlock even greater potential. Combining federated tumor models with explainable AI techniques could help clinicians understand and trust the model's recommendations. Linking these approaches with blockchain technology might provide immutable audit trails of model development while preserving privacy. As quantum computing advances, new forms of secure multiparty computation could emerge, further enhancing privacy protections.
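The audit-trail idea can be illustrated with a hash chain, the basic data structure underlying a blockchain: each log entry stores the hash of the previous one, so altering any past record invalidates every later hash. This is a sketch under simplifying assumptions; it omits the distributed consensus that a real blockchain adds, and the record fields (`round`, `sites`) and function names are hypothetical.

```python
import hashlib
import json
from typing import Any, Dict, List

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(prev_hash: str, record: Dict[str, Any]) -> str:
    """SHA-256 over the previous hash and the record, serialized deterministically."""
    payload = json.dumps({"prev": prev_hash, "record": record}, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append_entry(log: List[Dict[str, Any]], record: Dict[str, Any]) -> None:
    """Append a record, linking it to the hash of the previous entry."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    log.append({"prev": prev_hash, "record": record,
                "hash": _digest(prev_hash, record)})


def verify(log: List[Dict[str, Any]]) -> bool:
    """Recompute every hash and check that the chain links are intact."""
    prev_hash = GENESIS
    for entry in log:
        if entry["prev"] != prev_hash:
            return False
        if _digest(entry["prev"], entry["record"]) != entry["hash"]:
            return False
        prev_hash = entry["hash"]
    return True


# Log metadata about training rounds only; no patient data is recorded.
audit_log: List[Dict[str, Any]] = []
append_entry(audit_log, {"round": 1, "sites": ["A", "B"]})
append_entry(audit_log, {"round": 2, "sites": ["A", "B", "C"]})
```

Calling `verify(audit_log)` succeeds on the untouched log and fails if any earlier record is modified afterwards.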

The journey toward widespread adoption of federated learning in oncology is just beginning. Early adopters are proving the concept's viability, but much work remains to turn these prototypes into robust clinical tools. What's clear is that the traditional trade-off between data privacy and medical progress is being redefined. In federated learning, we may have found a path that honors our ethical obligations to patients while accelerating the discovery of better cancer treatments—a rare win-win in the complex world of medical research.

By /Jul 18, 2025
